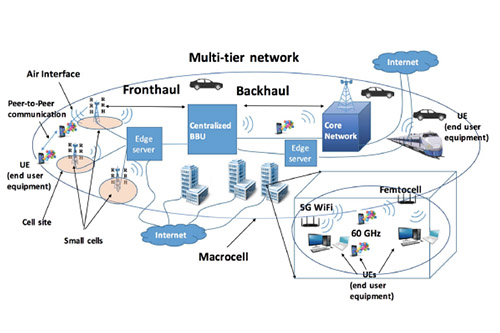

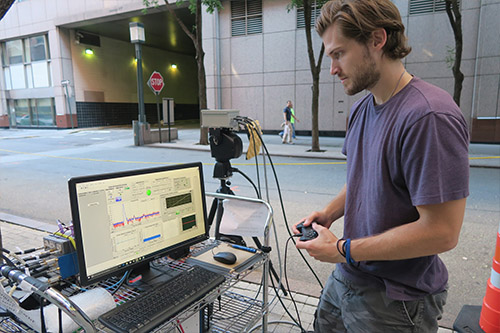

VIS4ION works by using miniaturized sensors—including cameras, microphones, GPS, and motion sensors on wearable devices—to collect data about the user’s environment. Artificial Intelligence (AI) services running locally within the platform and remotely in the cloud process the sensor data to interpret the environment and tell the user where to walk, what to avoid, and how to maneuver through hazards.

“People with blindness and low vision have unacceptably high unemployment rates, with some studies showing levels at about eighty percent,” said J. R. Rizzo, the project’s Principal Investigator (PI). “A critical obstacle to employment is commuting difficulties and navigation within the workplace itself. This project takes a fundamental step in solving this problem. If successful, we believe it will significantly improve pBLV’s quality of life and unlock their potential to contribute fully to the communities in which they live.”

In 2022, the NSF awarded the team a Phase One Convergence Accelerator Grant to support its early VIS4ION work. These highly competitive grants fund research to address societal challenges. The team’s promising preliminary results prompted the NSF to advance the VIS4ION project to its Phase Two funding round.

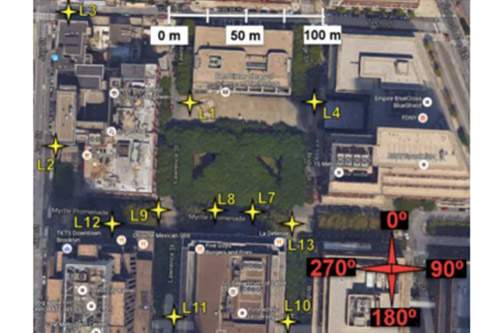

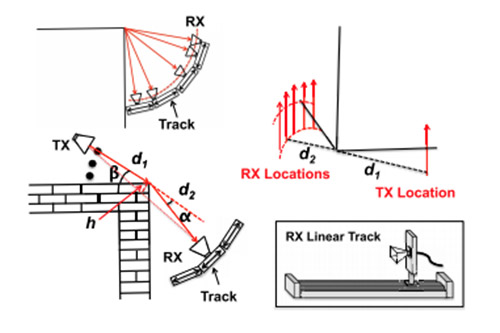

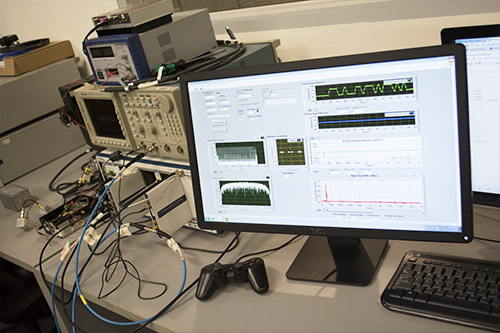

During Phase One, the researchers built a lightweight wearable VIS4ION backpack prototype with cameras, on-board processing, and audio and haptic feedback. They then demonstrated that the backpack could be used for safe navigation through a variety of environments. The team also developed VIS4ION mobile, a stand-alone platform that runs entirely on a smartphone. The signature microservice, offered on both the wearable and mobile platforms, is UNav, software that creates camera-based, infrastructure-free “digital twins” (3D maps) of complex indoor and outdoor environments and supports wayfinding to close- or far-range destinations through audio and haptic user prompts.

In collaboration with New York City’s Metropolitan Transportation Authority (MTA), the VIS4ION researchers also unveiled an early version of Commute Booster, a smartphone app that guides users to their destinations in subway stations by “reading” the directional signage it encounters.

Moving forward, the team will continue to improve VIS4ION services while reducing the wearable’s size and weight. The goal is a commercially available wearable product, a breakthrough that user advocates have sought for years. “NYU has the rare ability to bring faculty from across schools and departments to collaborate on the creation of technologies that help create greater accessibility,” said NYU Tandon Dean Jelena Kovačević. “Engineering can even the playing field, and it is research like this, led by J. R. Rizzo, that ensures that everyone is able to navigate both urban and rural communities.”

Vital to the work are the team’s collaborations with industry leaders, including NYU WIRELESS Industrial Affiliate Qualcomm, whose ongoing research and development efforts contribute broadly to the wearable technology ecosystem. “Snapdragon platforms enable compelling new applications that require high performance compute, wireless, and AI capabilities,” said Junyi Li, Vice President, Engineering at Qualcomm Technologies, Inc. “Qualcomm is looking forward to the collaboration with NYU in this NSF-awarded project to enable powerful wearable devices with advanced assistive technologies for people with blindness and low vision.”

Dell Technologies, another NYU WIRELESS Industrial Affiliate, will provide critical Dell Precision rack workstations to enable the cloud-based AI microservices for the wearable. “Collaborating with NYU on this project is an incredible opportunity to use our technology to advance and transform accessibility,” said Charlie Walker, Senior Director and GM, Precision Workstations, Dell Technologies. “Using Dell Precision rack workstations equipped with powerful GPUs, NYU can run computing-intensive tasks, such as real-time object detection and vision-language models, to expand and improve the AI services on the wearable.”

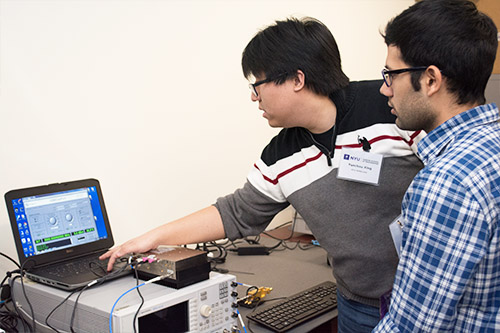

In addition to J. R. Rizzo, the senior personnel on the project reflect an integrated effort that crosses disciplines and engages multiple departments and centers: NYU WIRELESS members Sundeep Rangan (Associate Director of the Center), Yao Wang, and Marco Mezzavilla, as well as Tommy Azzino, graduate research assistant; and NYU Tandon professors Chen Feng, Maurizio Porfiri, and Yi Feng. Others from NYU Tandon involved in the project include Anbang Yang, Junchi Feng, Fabiana Ricci, and Jacky Yuan. Clinical Associate Professor Ashish Bhatia at NYU Stern School of Business will also contribute. From NYU Grossman School of Medicine, team members include Mahya Beheshti, Giles Hamilton-Fletcher, Todd Hudson, and Joseph Rath.

Tactile Navigation Tools (TNT), the team’s start-up partner, will drive the project’s commercialization efforts, beginning with expanding the technology’s applications to improve accessibility and quality in NYC real estate for customers with and without navigational challenges. TNT is led by CEO Van Krishnamoorthy, a physician entrepreneur.

The project’s nonprofit collaborators include the Lighthouse Guild—whose Chief Research Officer William H. Seiple will help direct and develop protocols for all workplace studies—and VISIONS. Key government agencies including the MTA and NYC Department of Transportation are also involved.

In addition to Qualcomm and Dell, collaborators include Google, AT&T, and TaggedWeb. The VIS4ION project reflects NYU Tandon’s commitment to solving problems related to health, one of the seven interdisciplinary “Areas of Excellence” that define the school’s research and educational priorities.
